Extracting all URLs of your sitemap.xml with JavaScript

by Christoph Schiessl on JavaScript

Automation is always preferable over manual work, and on-page SEO optimization is no exception. But, before you can automate anything, you need a way to get a list of your website's pages so you can programmatically check certain aspects of each one. Therefore, in this article, I will explain how you can parse your website's /sitemap.xml with JavaScript to get a list of URLs you can then iterate over.

Valid sitemaps follow a standardized XML schema, so we can define a sequence of steps that will always work to extract a sitemap's URLs.

- Fetch the file from the server.

- Parse the XML

Stringinto a data structure to work with. - Query the parsed XML to get all

<loc>elements it contains. - Get the text content for each

<loc>element because these are the URLs.

Fetching /sitemap.xml

Before you can process your sitemap, you have to load it. We can use the asynchronous fetch() function, which is widely supported across browsers. Asynchronous means that the function returns a Promise, which, in this case, resolves to a Response object. To get the Response object itself, we have to use the await keyword, which blocks execution until Promise has been resolved.

let rawXMLString = await fetch("/sitemap.xml").then((response) => response.text());

However, we are not interested in the whole Response object; we only want the text of its HTTP response body. This can be achieved with the Response object's text() function, also an async function. To chain the two Promise objects, we can use the then() function of the first Promise object to map to the second one (i.e., the Promise object returned by text()). It's tricky to explain, but the following equivalent code should clarify it.

let response = await fetch("/sitemap.xml");

let rawXMLString = await response.text();

Parsing the XML String

Once we have the raw XML String, we must parse it. Modern browsers have a built-in DOMParser, which we can instantiate. The resulting object, in turn, exposes the parseFromString() function, which is precisely what we need — it converts a String of raw content and a mime type to an object that implements the Document interface.

let parsedDocument = (new DOMParser()).parseFromString(rawXMLString, "application/xml");

The Document interface is the same one implemented by the ubiquitous window.document object. Therefore, it provides all the DOM-related functionality you are used to if you are familiar with the window.document object.

Retrieving all <loc> elements

Since our parsedDocument implements the Document interface, we have the querySelectorAll() function at our disposal. This function evaluates the given CSS selector against the document it was called on and returns a collection of all elements that match the selector.

let locElements = parsedDocument.querySelectorAll("loc");

In our case, the selector is "loc" because we want to match all <loc> elements. The collection we get is an instance of NodeList, a special type representing lists of DOM elements.

Extracting URLs from <loc> elements

Finally, to iterate over the <loc> elements in the NodeList collection, we must call its values() function to get an ordinary iterator. Once we have an iterator, we can work with it as usual. Firstly, we can use its map() function to translate our <loc> elements to their textContent, which are the URLs we have been seeking to extract all along. Secondly, we use its toArray() function to collect the mapped elements into a plain old Array object.

let urls = locElements.values().map((e) => e.textContent.trim()).toArray();

To be on the safe side, I also trim() the URLs to remove any leading and trailing whitespace.

Putting everything together

To conclude, we can put all this together into one function:

async function extractPageURLsFromSitemapXML() {

let rawXMLString = await fetch("/sitemap.xml").then((response) => response.text());

let parsedDocument = (new DOMParser()).parseFromString(rawXMLString, "application/xml");

let locElements = parsedDocument.querySelectorAll("loc");

return locElements.values().map((e) => e.textContent.trim()).toArray();

}

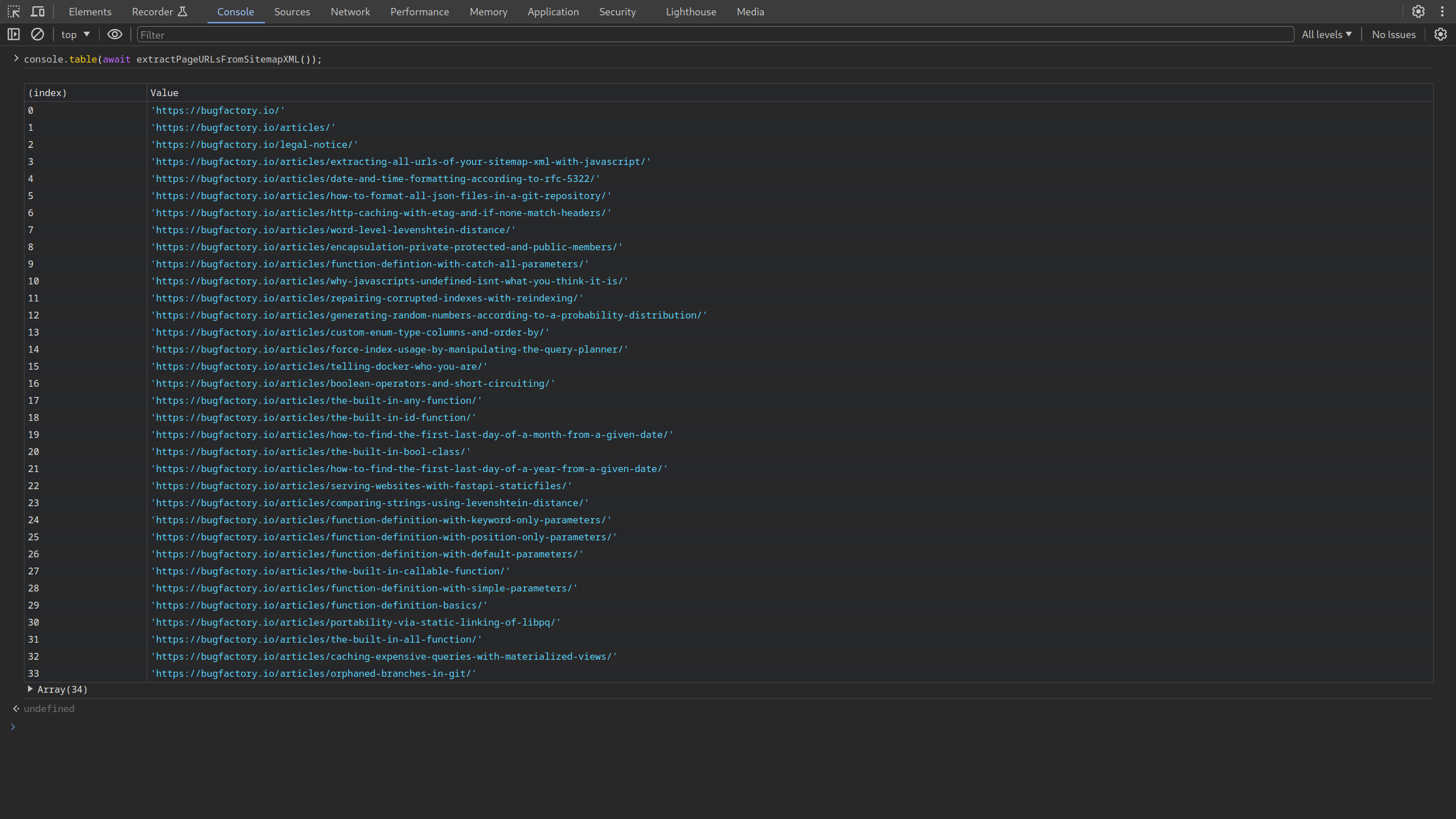

If you then call this function and log its output, you can get something like the following:

Generated with console.table(await extractPageURLsFromSitemapXML()); using the /sitemap.xml of bugfactory.io as input.

That's everything for today. Thank you for reading, and see you next time!